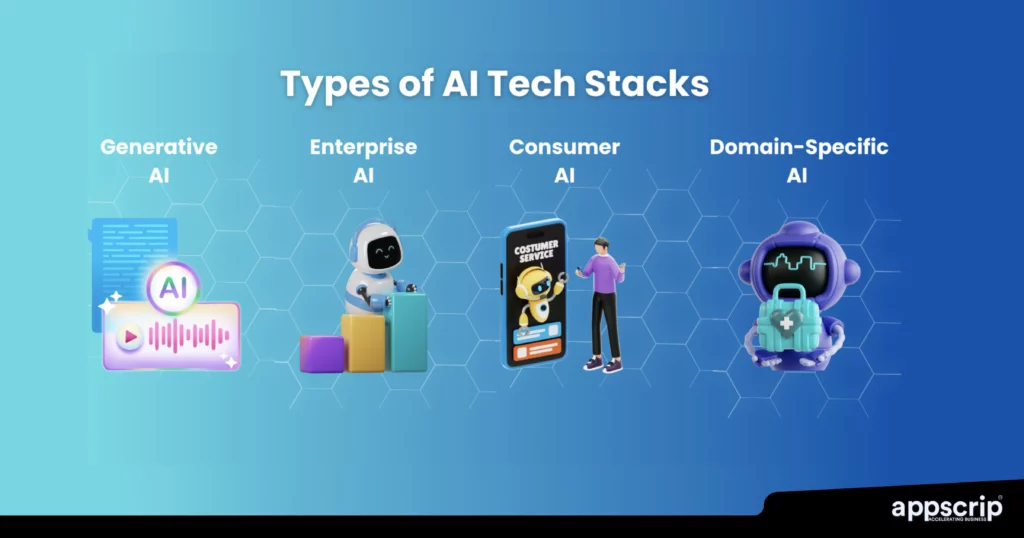

An AI tech stack is a set of infrastructure components, tools, frameworks, and technologies that work in tandem to develop, deploy, and manage AI and ML solutions.

Like other tech stacks, an AI stack organizes its elements into layers that work together to enable efficient and scalable AI implementation.

This layering breaks the complexity of building AI solutions into manageable components, so teams can focus on individual aspects without losing sight of the final goal.

Each layer in the stack serves a single function, from data handling to model deployment. That separation makes it easier to identify dependencies, allocate resources, and address challenges systematically.

TL;DR

- AI Tech Stack Overview: An AI tech stack comprises infrastructure, tools, and technologies for developing and managing AI solutions.

- Core Components:

- Data Layer: Involves data collection, storage, and preprocessing.

- AI Frameworks & Libraries: Tools like TensorFlow, PyTorch, and Scikit-learn for building and training models.

- Model Development & Training: Environments like Jupyter Notebook, Google Colab, and Databricks.

- Compute Infrastructure: CPUs, GPUs, TPUs, and cloud solutions like AWS SageMaker, Google Cloud AI, and Azure ML.

- MLOps & Deployment: CI/CD pipelines, model versioning, and scaling tools like Docker and Kubernetes for efficient model management.

- Key Considerations:

- Project Requirements: Complexity, scalability, and domain-specific needs.

- Performance: Computational efficiency, inference speed, and resource utilization.

- Ecosystem Support: Active development, documentation, and community resources.

- Integration: Compatibility with existing infrastructure and support for various data sources.

- Cost Management: Balancing cloud vs. on-premise infrastructure and licensing costs.

- Flexibility: Support for multiple ML paradigms and adaptability to new techniques.

- Case Studies:

- Recommendation Engines: Netflix and Amazon use AI for personalized content and product recommendations.

- Healthcare Diagnostics: IBM Watson provides transparent, evidence-based treatment recommendations.

- Finance and Banking: AI for risk management, customer experience, and operational efficiency.

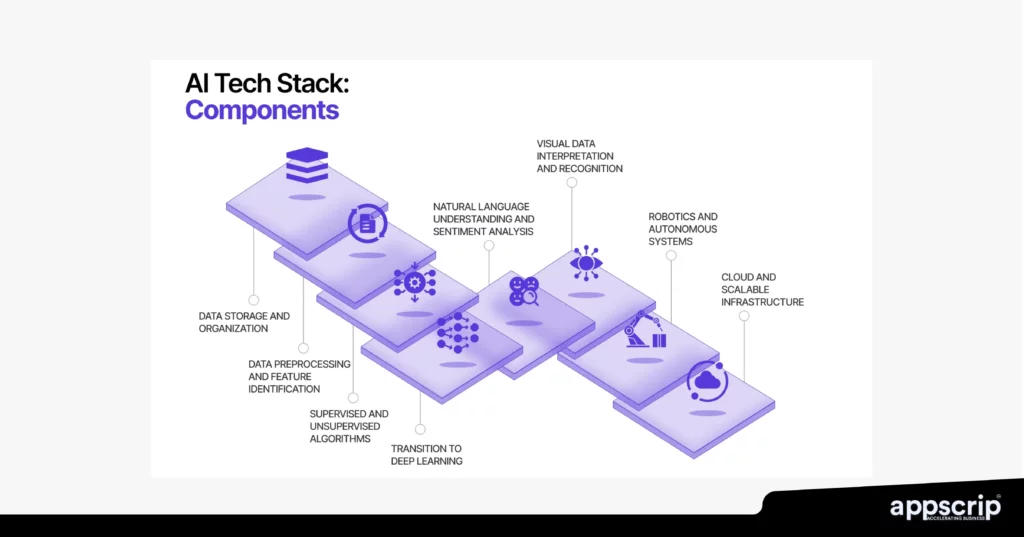

Core Components of an AI Tech Stack

Building an AI system is like assembling a high-tech machine: every layer has to work with the others, or the system won't function well. Let's break down the core components.

Data Layer – Data Collection & Storage

This is where you gather and store the information your AI needs to learn and make decisions.

- Data Sources:

- Structured Data: Neat, organized info like spreadsheets (e.g., customer IDs, sales numbers). Great for clear predictions like sales trends.

- Unstructured Data: Jumbled, raw info like videos, social media posts, or audio clips. Helps with deeper insights, such as understanding customer emotions through sentiment analysis.

- Most AI projects use both. For example, a store might combine sales data with TikTok reviews to predict what’s trending.

- Remember: Quality matters more than quantity. Bad data will always cause bad results.

- Databases & Data Lakes:

- SQL Databases (e.g., PostgreSQL, MySQL): Store structured data for quick searches, like “Who bought socks last week?”

- NoSQL Databases (e.g., MongoDB, Cassandra): Handle unstructured data, like random info from sensors or social media.

- Data Lakes (e.g., Hadoop; cloud data platforms such as Snowflake fill a similar role): Store raw data of all kinds in one big pool. Well suited to large-scale AI projects that need tons of data.

- Choose based on your needs and budget.
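As a minimal sketch of the "Who bought socks last week?" lookup, the snippet below uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL or MySQL. The `purchases` table, its columns, the helper name, and the sample rows are all hypothetical.

```python
import sqlite3
from datetime import date, timedelta

def customers_who_bought(conn, product, since):
    """Return distinct customers who bought `product` on or after `since`."""
    rows = conn.execute(
        "SELECT DISTINCT customer FROM purchases "
        "WHERE product = ? AND purchased_on >= ? ORDER BY customer",
        (product, since.isoformat()),  # ISO dates sort correctly as text
    )
    return [row[0] for row in rows]

# In-memory database with made-up sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchases (customer TEXT, product TEXT, purchased_on TEXT)")
today = date(2024, 6, 10)  # fixed date so the example is reproducible
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?, ?)",
    [
        ("Ana", "socks", (today - timedelta(days=2)).isoformat()),
        ("Ben", "socks", (today - timedelta(days=30)).isoformat()),
        ("Cy", "hat", (today - timedelta(days=1)).isoformat()),
    ],
)
recent_sock_buyers = customers_who_bought(conn, "socks", today - timedelta(days=7))
```

With this sample data, `recent_sock_buyers` contains only `"Ana"`: Ben's purchase is too old and Cy bought a different product.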

- Data Pipelines and ETL Processes:

- ETL (Extract, Transform, Load) is like a data cleaning and organizing system.

- It grabs data from sources (APIs, spreadsheets, etc.), cleans it (removes duplicates, fixes errors), and loads it into your system.

- Tools like Apache Airflow or AWS Glue automate this process, so your AI always has fresh, clean data to work with.
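The extract, transform, and load steps described above can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not how Airflow or AWS Glue are configured: the CSV content, the `sales` table, and the function names are hypothetical.

```python
import csv
import io
import sqlite3

# Made-up raw input: one duplicate row and one row with a bad amount.
RAW_CSV = """customer_id,amount
1,19.99
2,5.00
2,5.00
3,not-a-number
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop exact duplicates and rows whose values fail to parse."""
    seen, clean = set(), []
    for row in rows:
        key = (row["customer_id"], row["amount"])
        if key in seen:
            continue
        seen.add(key)
        try:
            clean.append((int(row["customer_id"]), float(row["amount"])))
        except ValueError:
            continue  # skip rows that fail validation
    return clean

def load(conn, records):
    """Load: write the cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (customer_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute("SELECT customer_id, amount FROM sales ORDER BY customer_id").fetchall()
```

Only the two valid, distinct rows reach the `sales` table. An orchestration tool would schedule and monitor functions like these rather than replace them.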

AI Frameworks & Libraries

These are the tools you use to build and train your AI models.

- Popular Frameworks:

- TensorFlow: Great for big, complex projects (e.g., self-driving cars). It’s robust and production-ready.

- PyTorch: Flexible and easy to use, especially for research and prototyping (e.g., chatbots).

- Scikit-learn: Simple and lightweight, perfect for smaller tasks like predicting customer behaviour.

- All are free and open-source, with active communities to help you out.

- When to Choose Which Framework:

- Use TensorFlow if you’re building something big and scaling is imperative.

- Use PyTorch if you are trying out stuff and need flexibility.

- Use Scikit-learn for classical ML tasks that aren't too complex.

| Framework | Pros | Cons | Best Use Cases |
| --- | --- | --- | --- |
| TensorFlow | Flexible, scalable, extensive community support | Steep learning curve | Large-scale deep learning and production models |
| PyTorch | Intuitive syntax, dynamic computation graph, excellent for research | Less mature for large-scale production | Research, prototyping, and dynamic models |
| Scikit-learn | Simple, great for classical ML, excellent documentation | Limited support for deep learning, not scalable | Small to medium ML projects, traditional ML tasks |

Model Development & Training

This is where you create and teach your AI model.

- Development Environments:

- Jupyter Notebook: A whiteboard for writing and testing code. Just right for solo work.

- Google Colab: Free, cloud-based notebook tool with access to GPUs for training models. Well suited to beginners and small projects.

- Databricks: A collaborative platform for teams working on big projects.

- Training vs. Fine-Tuning:

- Training from Scratch: Building a custom model from the ground up usually takes time and lots of data.

- Fine-Tuning: Tweaking a pre-trained model to fit your needs. Faster and easier.

- Example: A payment gateway might fine-tune a fraud detection model instead of building one from scratch.
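A toy analogy may help show why fine-tuning is faster. The sketch below fits a one-feature linear model with plain gradient descent, once from zero weights and once from weights that are already close to the target. The "pre-trained" starting point and the data are invented for illustration; real fine-tuning loads pre-trained weights in a framework such as TensorFlow or PyTorch.

```python
def mse(w, b, data):
    """Mean squared error of the model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, steps, lr=0.05):
    """Plain gradient descent on a one-feature linear model."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical target task: y = 3x + 1
task_data = [(x, 3 * x + 1) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]

# Same training budget, different starting points.
scratch = train(0.0, 0.0, task_data, steps=20)     # training from scratch
fine_tuned = train(2.8, 0.9, task_data, steps=20)  # starting from "pre-trained" weights

scratch_loss = mse(*scratch, task_data)
fine_tuned_loss = mse(*fine_tuned, task_data)
```

With the same 20 steps, the warm-started model ends with a much lower loss than the one trained from zero, which is the core intuition behind fine-tuning.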

- Hyperparameter Tuning Tools:

- Hyperparameters are like the “settings” for your AI model.

- Tools like Optuna or Keras Tuner help you find the best settings to improve accuracy.

- Don't dismiss small improvements: even a slight gain in accuracy, such as catching a few more fraudulent transactions, can save a lot of money.
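To make the idea of searching for good "settings" concrete, here is a minimal grid search in plain Python. It is not Optuna or Keras Tuner, which use smarter search strategies; the model, the data, and the grid values are assumptions chosen to keep the example tiny.

```python
from itertools import product

def fit(data, lr, steps):
    """Fit y = w * x by gradient descent; lr and steps are the hyperparameters."""
    w = 0.0
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def validation_loss(w, data):
    """Mean squared error on held-out data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def grid_search(train_data, val_data, grid):
    """Try every combination in `grid`; return (best_loss, best_params)."""
    best = None
    for lr, steps in product(grid["lr"], grid["steps"]):
        loss = validation_loss(fit(train_data, lr, steps), val_data)
        if best is None or loss < best[0]:
            best = (loss, {"lr": lr, "steps": steps})
    return best

train_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # made-up data, y = 2x
val_data = [(4.0, 8.0), (5.0, 10.0)]
grid = {"lr": [0.001, 0.01, 0.1], "steps": [10, 50]}

best_loss, best_params = grid_search(train_data, val_data, grid)
```

On this data the largest learning rate in the grid wins, because the smaller ones have not converged within the step budget. Scoring on held-out validation data, not the training data, is the design choice that matters most here.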

Compute Infrastructure & Hardware

This is what powers your AI system.

- CPUs vs. GPUs vs. TPUs:

- CPUs: General-purpose processors that work well for simple tasks and small models but struggle with heavy AI workloads.

- GPUs: Faster and better for training complex models.

- TPUs: Google's custom accelerators, designed for large-scale deep learning workloads such as Google's own AI projects.

- Choose based on your budget and needs.

- Cloud AI Solutions:

- AWS SageMaker, Google Cloud AI, and Azure ML are cloud platforms that provide tools for building, training, and deploying AI models.

- Their main advantage is pay-as-you-go pricing: you pay only for what you use.

- On-Premise vs. Cloud:

- On-Premise: You invest in your own hardware. Expensive to set up, but good for sensitive data.

- Cloud: Rent computing power as needed. Flexible and scalable.

- Many companies use a mix of both (hybrid approach).
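The on-premise versus cloud trade-off often comes down to simple break-even arithmetic. The sketch below is one way to frame it; the function name and all cost figures are hypothetical, and it ignores factors such as hardware depreciation, staffing, and discounts.

```python
import math

def break_even_months(hardware_cost, onprem_monthly, cloud_monthly):
    """Months until cumulative on-premise cost no longer exceeds cumulative
    cloud cost, or None if on-premise never catches up."""
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None  # cloud is never more expensive per month
    return math.ceil(hardware_cost / monthly_saving)

# Hypothetical figures for illustration only.
months = break_even_months(hardware_cost=60_000, onprem_monthly=1_000, cloud_monthly=3_500)
```

With these made-up numbers the up-front hardware spend is recovered after 24 months. If the workload is expected to run for less time than that, renting cloud capacity is the cheaper option.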

MLOps & Model Deployment

This is how you manage and run your AI models in production.

- CI/CD Pipelines for AI:

- Continuous Integration/Continuous Deployment (CI/CD) helps keep your models up to date and catches bugs before they reach production.

- It is best to automate the process using tools like Jenkins or GitHub Actions.

- Example: Payment gateways usually update their fraud detection model regularly.
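A CI/CD pipeline for models typically adds a quality gate on top of ordinary tests: a new model is deployed only if it is at least as good as the one in production. The sketch below shows that logic in plain Python. It is not Jenkins or GitHub Actions configuration, and the stage names, metric, and numbers are assumptions.

```python
def should_deploy(candidate_metrics, production_metrics, metric="recall", min_gain=0.0):
    """Quality gate: candidate must match or beat production on `metric`."""
    return candidate_metrics[metric] >= production_metrics[metric] + min_gain

def run_pipeline(stages):
    """Run (name, check) stages in order; stop at the first one that fails."""
    for name, check in stages:
        if not check():
            return f"failed: {name}"
    return "deployed"

# Hypothetical evaluation results for a fraud detection model.
production = {"recall": 0.91}
candidate = {"recall": 0.93}

result = run_pipeline([
    ("unit tests", lambda: True),  # stand-in for a real test run
    ("quality gate", lambda: should_deploy(candidate, production)),
])
```

Here `result` is `"deployed"`. A candidate with lower recall would stop the pipeline at the quality gate instead of reaching users.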

- Model Versioning and Monitoring Tools:

- Track changes and performance using tools like MLflow and Kubeflow.

- Example: If the new version of your model doesn’t perform as expected, you can roll back to the previous one.
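The rollback idea can be illustrated with a toy in-memory registry. This is a sketch of the concept only, not the MLflow or Kubeflow API; the class, its methods, and the model names are hypothetical.

```python
class ModelRegistry:
    """Toy registry: keeps every version and tracks which one is live."""

    def __init__(self):
        self._versions = []  # list of (model, metrics); index = version - 1
        self._live = None    # currently deployed version number
        self._history = []   # previously live versions, newest last

    def register(self, model, metrics):
        """Store a new version and return its version number."""
        self._versions.append((model, metrics))
        return len(self._versions)

    def promote(self, version):
        """Make `version` the live model, remembering the previous one."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        if self._live is not None:
            self._history.append(self._live)
        self._live = version

    def rollback(self):
        """Return to the version that was live before the last promotion."""
        if not self._history:
            raise RuntimeError("no earlier version to roll back to")
        self._live = self._history.pop()
        return self._live

    @property
    def live(self):
        return self._live

registry = ModelRegistry()
v1 = registry.register("fraud-model-a", {"recall": 0.91})
v2 = registry.register("fraud-model-b", {"recall": 0.88})
registry.promote(v1)
registry.promote(v2)  # the new version underperforms in production...
registry.rollback()   # ...so return to the previous one
```

After the rollback, version 1 is live again. Keeping every version, rather than overwriting the model in place, is what makes this possible.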

- Scaling AI Models in Production:

- Use tools like Docker and Kubernetes to run your models efficiently, even during high traffic (e.g., Black Friday sales).

- Start small and scale up as your business grows.
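Kubernetes' Horizontal Pod Autoscaler documents its core scaling rule as `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The sketch below reproduces only that arithmetic, with replica bounds added; the real autoscaler also applies tolerances and stabilization windows, and the traffic numbers here are invented.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale replica count in proportion to load, within fixed bounds."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))

# Hypothetical load: requests per second per replica, with a target of 50.
normal = desired_replicas(2, current_metric=50, target_metric=50)
peak = desired_replicas(2, current_metric=180, target_metric=50)
```

At normal load the replica count stays at 2. During the simulated traffic spike it rises to 8, and `max_replicas` caps how far a larger spike could push it.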

Key Considerations When Selecting an AI Tech Stack

1. Project Requirements

- Complexity of the AI/ML models

- Scalability requirements

- Domain-specific needs and industry-level constraints

2. Performance Considerations

- Required computational efficiency

- Target inference speed

- Resource utilization

- Support for GPUs and distributed computing

3. Ecosystem and Community Support

- Active development

- Comprehensive documentation

- Available community resources and forums

- Long-term maintainability

4. Integration Capabilities

- Compatibility with existing infrastructure

- Ease of integration with other tools

- Support for various data sources and formats

5. Cost and Resource Management

- Cloud vs. on-premises infrastructure

- Licensing costs

- Computational resource expenses

6. Flexibility and Extensibility

- Support for multiple ML paradigms

- Ability to experiment with new techniques

- Adaptability to changing technological landscapes

No tech stack is one-size-fits-all. The choice requires careful evaluation against your specific project needs and organizational constraints.
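One common way to structure such an evaluation is a weighted scoring matrix over the six considerations above. The sketch below is only an illustration: the weights, the 1 to 5 ratings, and the candidate names are invented, and a real evaluation would justify each number.

```python
def score_stack(ratings, weights):
    """Weighted average of 1-5 ratings; weights need not sum to 1."""
    total_weight = sum(weights.values())
    return sum(ratings[criterion] * w for criterion, w in weights.items()) / total_weight

# Hypothetical weights: how much each consideration matters for this project.
weights = {
    "requirements": 3, "performance": 2, "ecosystem": 2,
    "integration": 2, "cost": 2, "flexibility": 1,
}

# Hypothetical ratings for two candidate stacks.
candidates = {
    "stack_a": {"requirements": 4, "performance": 5, "ecosystem": 4,
                "integration": 3, "cost": 2, "flexibility": 3},
    "stack_b": {"requirements": 3, "performance": 3, "ecosystem": 5,
                "integration": 4, "cost": 4, "flexibility": 4},
}

ranked = sorted(candidates, key=lambda name: score_stack(candidates[name], weights),
                reverse=True)
```

With these numbers `stack_b` ranks first even though `stack_a` performs better, because cost and integration carry real weight. Changing the weights changes the answer, which is the point of making them explicit.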

Security & Compliance in AI Tech Stack

As AI adoption grows, security, compliance, and ethics in AI systems become a priority. Organizations need to implement strong safeguards to protect sensitive data, prevent unauthorized access, and ensure AI models operate fairly and transparently.

Data Privacy & Compliance (GDPR, HIPAA, CCPA)

AI systems process vast amounts of personal and sensitive data, making regulatory compliance essential. Key regulations include:

1. General Data Protection Regulation (GDPR – Europe)

- Requires data minimization: collect and use only the information that is necessary.

- Requires a lawful basis, such as user consent, before processing personal data.

- Gives users the right to have their data deleted (the “right to be forgotten”).

- Expects organizations to provide meaningful information about automated decision-making (e.g., for loan approvals).

2. Health Insurance Portability and Accountability Act (HIPAA – U.S.)

- Applies to AI systems handling healthcare and patient data.

- Requires encryption and strict access control of medical records.

- Ensures AI-driven diagnostics and predictive analytics comply with medical privacy laws.

3. California Consumer Privacy Act (CCPA – U.S.)

- Gives consumers the right to access and delete their personal data and to opt out of its sale or sharing, including when AI systems process it.

- Ensures transparency in AI models collecting consumer behaviour data.

- Requires businesses to disclose AI-driven decision-making that affects user rights.

Security Best Practices for AI Models

AI models are susceptible to various cyber threats, including adversarial attacks, model theft, and data poisoning. Here is how to secure them:

1. Secure AI Training & Data Handling

- Encrypt datasets and implement role-based access control (RBAC).

- Use differential privacy techniques to avoid user re-identification.

- Detect data poisoning attacks, in which training data is manipulated.

2. Model Protection Against Attacks

- Implement adversarial defence techniques to safeguard AI from manipulated inputs.

- Use homomorphic encryption for secure AI model inference.

- Store models in secure containers with restricted API access.

3. Secure Deployment & Monitoring

- Utilize secure multi-party computation (SMPC) to allow AI to process encrypted data.

- Deploy AI monitoring systems to detect anomalies or biases in real-time.

- Regularly update AI models to patch vulnerabilities.
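Of the techniques listed above, differential privacy is easy to illustrate in a few lines. The sketch below applies the Laplace mechanism to a counting query, whose sensitivity is 1 because adding or removing one person changes the count by at most 1. The data, the function names, and the choice of epsilon are assumptions, and production systems should use a vetted library.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two iid exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng):
    """Counting query with epsilon-differential privacy (sensitivity 1)."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)               # seeded only to make the demo repeatable
ages = [23, 35, 41, 52, 67, 29, 48]  # made-up records
noisy = private_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng)
```

The true count here is 4, and each call returns that value plus random noise. A smaller epsilon means more noise and stronger privacy, so no single released answer reveals whether a particular person is in the dataset.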

Best Practices for AI Security:

✅ Encrypt both data and AI models using end-to-end encryption.

✅ Implement access controls to restrict unauthorized model interactions.

✅ Conduct penetration testing to identify and fix security gaps.

✅ Use model explainability tools to detect unintended biases and errors.
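Role-based access control, mentioned above, reduces to a mapping from roles to permitted actions plus a check before each sensitive operation. The roles, actions, and function names in this sketch are hypothetical; real deployments usually delegate this to an identity provider or the platform's access management.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_dataset", "train_model"},
    "ml_engineer": {"read_dataset", "train_model", "deploy_model"},
    "analyst": {"query_model"},
}

def is_allowed(role, action):
    """True if `role` has been granted `action`; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())

def deploy_model(user_role, model_name):
    """Guarded operation: refuse unless the caller's role permits deployment."""
    if not is_allowed(user_role, "deploy_model"):
        raise PermissionError(f"role '{user_role}' may not deploy models")
    return f"{model_name} deployed"

status = deploy_model("ml_engineer", "fraud-model")
```

Denying by default, so that an unknown role has no permissions at all, is the safer design choice.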

Ethical AI Considerations

Ensuring AI operates ethically is crucial to gaining public trust and preventing harmful consequences. Ethical AI revolves around fairness, transparency, and accountability.

1. Bias & Fairness in AI Models

- AI can inherit biases from training data, leading to discriminatory decisions.

- Organizations must use fairness metrics to detect and mitigate bias (e.g., Equalized Odds, Demographic Parity).

- Regular audits can ensure AI doesn’t reinforce gender, racial, or economic inequalities.

2. Explainability & Transparency

- AI “black boxes” can produce decisions that humans cannot understand or justify.

- Techniques like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) improve model explainability.

- Transparency ensures users understand how AI makes decisions (e.g., credit scoring, hiring algorithms).

3. Accountability & Human Oversight

- AI systems must have clear accountability frameworks in place.

- Automated decisions should be reviewable by humans, especially in high-stakes areas like healthcare and criminal justice.

- Establishing ethical AI committees within organizations can help oversee AI deployment.
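The two fairness metrics named above can be computed directly from predictions. The sketch below measures the demographic parity gap (difference in positive-prediction rates) and the equalized odds gap (largest difference in true-positive or false-positive rates) between two groups. The labels, predictions, and group names are made up, and the helper names are hypothetical.

```python
def rate(preds):
    """Share of positive predictions in a list of 0/1 values."""
    return sum(preds) / len(preds) if preds else 0.0

def demographic_parity_diff(y_pred, group):
    """Absolute gap in positive-prediction rate between groups 'a' and 'b'."""
    a = [p for p, g in zip(y_pred, group) if g == "a"]
    b = [p for p, g in zip(y_pred, group) if g == "b"]
    return abs(rate(a) - rate(b))

def equalized_odds_diff(y_true, y_pred, group):
    """Largest gap between groups in true-positive or false-positive rate."""
    gaps = []
    for label in (1, 0):  # label 1 gives the TPR gap, label 0 the FPR gap
        a = [p for t, p, g in zip(y_true, y_pred, group) if g == "a" and t == label]
        b = [p for t, p, g in zip(y_true, y_pred, group) if g == "b" and t == label]
        gaps.append(abs(rate(a) - rate(b)))
    return max(gaps)

# Made-up labels and predictions for eight applicants in two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]

dp_gap = demographic_parity_diff(y_pred, group)
eo_gap = equalized_odds_diff(y_true, y_pred, group)
```

Both gaps come out at 0.5 on this data: group "a" is approved three times as often as group "b", and the error rates differ too. A value near 0 on both metrics would indicate more even treatment.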

Best Practices for Ethical AI:

✅ Use diverse datasets to train unbiased models.

✅ Make AI decisions interpretable and explainable to stakeholders.

✅ Ensure human-in-the-loop mechanisms for critical AI decisions.

✅ Follow global AI ethics frameworks like UNESCO’s Recommendation on the Ethics of Artificial Intelligence.

Case Studies & Real-World Examples

AI-Powered Recommendation Engines

Netflix Recommendation System

- Personalization Algorithm

- Analyses viewing history

- Considers user interactions

- Predicts content preferences

- Key Features

- Collaborative filtering

- Content-based recommendations

- Real-time adaptation to user behaviour
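To show what collaborative filtering means in practice, here is a minimal user-based version: find users with similar ratings and recommend what they liked. This is a textbook sketch, not Netflix's actual system, and the users, titles, and ratings are invented.

```python
import math

def cosine(u, v):
    """Cosine similarity between two users' rating dicts (missing = 0)."""
    dot = sum(u[title] * v[title] for title in set(u) & set(v))
    norm = (math.sqrt(sum(r * r for r in u.values()))
            * math.sqrt(sum(r * r for r in v.values())))
    return dot / norm if norm else 0.0

def recommend(ratings, user, top_n=1):
    """Score unseen titles by similarity-weighted ratings from other users."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], other_ratings)
        for title, rating in other_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

# Made-up ratings on a 1-5 scale.
ratings = {
    "alice": {"Drama A": 5, "Sci-Fi B": 1},
    "bob": {"Drama A": 5, "Sci-Fi B": 1, "Drama C": 5},
    "cara": {"Drama A": 1, "Sci-Fi B": 5, "Sci-Fi D": 5},
}

picks = recommend(ratings, "alice")
```

Alice's tastes match Bob's far more closely than Cara's, so the title Bob rated highly ("Drama C") is recommended ahead of Cara's favourite.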

Amazon Product Recommendations

- Multi-dimensional Analysis

- Purchase history

- Browsing patterns

- Similar user preferences

- Advanced Techniques

- Machine learning clustering

- Predictive modelling

- Contextual recommendation

Healthcare Diagnostics

IBM Watson for Oncology

- Provides treatment recommendations

- Explains reasoning behind suggestions

- Transparent decision-making process

- Assists doctors with evidence-based insights

Ethical Considerations

- Bias Detection

- Algorithmic Transparency

- Fair and Accountable AI Systems

AI in Healthcare

- Diagnostic Capabilities

- Advanced medical imaging analysis

- Early disease detection

- Personalized treatment recommendations

- Predictive Analytics

- Patient risk assessment

- Resource allocation optimization

- Epidemic and outbreak prediction

- Emerging Technologies

- AI-powered robotic surgery

- Precision medicine

- Mental health monitoring

AI-Driven Automation in Finance and Banking

- Risk Management

- Fraud detection

- Credit scoring

- Algorithmic trading

- Customer Experience

- Personalized financial advice

- Chatbots and virtual assistants

- Automated customer support

- Operational Efficiency

- Process automation

- Regulatory compliance monitoring

- Real-time financial reporting

Conclusion

AI will be the preferred technology in many domains because of its ability to create intelligent, efficient, and trustworthy systems. These systems will be able to operate with minimal human intervention while maintaining transparency and upholding individual privacy.

The AI tech stack is not merely a technological toolkit; it is a strategic framework that empowers organizations to innovate, compete, and transform. As AI continues its march, understanding how its components behave and fit together is vital to meaningful technological advancement.