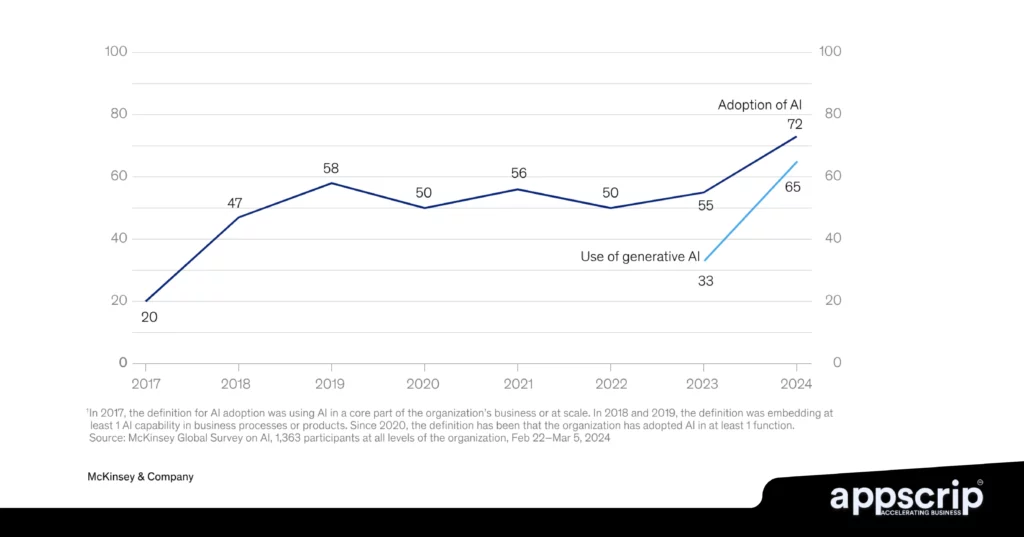

Enterprises aim to use technology to their advantage. With advances in the AI landscape, a build-first approach has given way to the pragmatic implementation focused strategy or seamless AI integration.

Organizations aren’t keen on training models from scratch, but focus on effectively harnessing existing AI technologies to solve business problems. This paradigm shift encourages integration, orchestration, and responsible deployment of third-party AI services.

TL;DR

Enterprises are shifting from building AI models from scratch to utilise existing AI technologies for solving business shortfalls.

- API Integration Layer: Crucial for apps like Uber, it ensures seamless communication between services. Key components include:

- API Management Platforms: Tools like Apigee, AWS API Gateway, and Kong for scalability, security, and performance.

- Security Best Practices: OAuth 2.0, JWT, TLS encryption, and rate limiting to protect sensitive data.

- Error Handling: Fallback mechanisms like retries, circuit breakers, and careful degradation for smooth experiences.

- Multimodal AI: Combines text, images, audio, and video for richer insights. Tools like OpenAI’s CLIP, DALL·E, and Whisper enable cross-modal reasoning. There are challenges to boot such as data alignment, compute demands, and real-time processing.

- Guardrail Technologies: Frameworks like LangChain, LlamaGuard, and NeMo Guardrails ensure ethical and safe AI usage. Techniques adopted include content filtering, input/output validation, and prompt injection prevention.

- Orchestration and Workflow Management: Tools like LangChain, Kubeflow, and Apache Airflow streamline AI workflows, enabling linking of multiple AI services and retrieval-augmented generation (RAG) for correct, exact, contextual responses.

- Implementation Patterns: Hybrid systems, human-in-the-loop designs, and event-driven architectures enhance AI reliability and adaptability.

API Integration Layer in a Ride-Hailing App Like Uber

API integration layer is key for a ride-hailing app like Uber, ensuring seamless communication between different services, third-party providers, and internal systems. Here’s a breakdown of key components:

API Management Platforms and Gateways

APIs in a ride-hailing app must be managed efficiently to ensure scalability, security, and performance. API management platforms and API gateways help achieve this by handling requests, monitoring usage, and enforcing security policies.

🔹 Common API Management Platforms:

- Apigee (by Google Cloud): Provides analytics, security, and monetization features.

- AWS API Gateway: Handles large request volumes by scaling and integrates with AWS services.

- Kong API Gateway: Open-source, lightweight, and offers high-performance routing.

- Mulesoft Anypoint Platform: Enterprise-level API management with strong integration capabilities.

🔹 Role of API Gateways in a Ride-Hailing App:

- Traffic control: Manages API requests from users, drivers, and partners.

- Security enforcement: Implements authentication, authorization, and encryption.

- Load balancing: Ensures stability by distributing traffic evenly across services.

- Protocol translation: Converts different formats (REST, gRPC, WebSockets) for compatibility.

Authentication and Security Best Practices

Security is critical in API integration, especially when handling sensitive user data, payments, and ride transactions.

🔹 Best Practices for API Security:

- OAuth 2.0 + OpenID Connect for secure user authentication.

- API keys & JWT (JSON Web Tokens) for token-based authorization.

- TLS encryption (HTTPS only) to prevent data interception.

- Role-Based Access Control (RBAC) to restrict API permissions based on user roles.

- Input validation & sanitization to prevent SQL injection and cross-site scripting (XSS).

- Logging & monitoring tools (Datadog, Splunk) to detect anomalies in API usage.

Rate Limiting and Quota Management

To prevent API overuse and ensure smooth operation, implementing rate limiting and quota management is essential.

🔹 Why Rate Limiting Matters in a Ride-Hailing App:

- Protects against DDoS attacks and excessive API calls.

- Ensures fair usage across all users.

- Helps manage server load efficiently.

🔹 Common Rate Limiting Strategies:

- Token Bucket Algorithm: Allows bursts of requests but ensures overall rate control.

- Leaky Bucket Algorithm: Processes requests at a fixed rate, preventing overload.

- Fixed Window & Sliding Window Algorithms: Defines request limits per time frame (e.g., 100 requests/min).

🔹 Quota Management:

- User-based quotas: Limits API calls per user (sometimes a driver app may have different limits compared to a passenger app).

- Tiered access: Premium users get higher API call limits compared to free-tier users.

- Retry-after headers: Notifies when users exceed the quota and communicates when to retry.

Error Handling and Fallback Strategies

Handling errors efficiently ensures a smooth user experience, even when API failures occur.

🔹 Best Practices for API Error Handling:

- Standardized HTTP status codes:

- 200 OK – Success

- 400 Bad Request – Invalid input

- 401 Unauthorized – Authentication failure

- 429 Too Many Requests – Rate limit exceeded

- 500 Internal Server Error – Server-side issue

- Detailed error messages: Provide actionable insights, like Invalid payment method, try another method.

- Fallback mechanisms:

- Retry with backoff: Implement exponential backoff when retrying failed API requests.

- Circuit breakers: Temporarily disable failing services to prevent cascading failures.

- Graceful degradation: When GPS fails, showing the last known location rather than crashing the app.

A well-designed API integration layer is imperative to the efficient working of a ride-hailing app. It ensures flawless communication between users, drivers, payment processors, and external services. Enabling a strong API management, security measures, rate limiting, and error handling, businesses can create a reliable and scalable platform.

Multimodal Context Processing (MCP)

Overview of Multimodal AI Capabilities

AI models that can process and integrate information from multiple data types like text, images, audio, and video to enhance understanding and reasoning is called multimodal AI. Don’t confuse this unimodal AI (which relies on a single data format). Multimodal AI allows:

- Cross-domain learning: Linking text with images for better comprehension.

- Enhanced perception: Combining speech recognition with visual cues to enhance accuracy.

- Rich contextual understanding: Analysing body language, tone, and spoken words cohesively.

🔹 Multimodal AI Applications:

✅ AI chatbots that process text & voice commands (e.g., ChatGPT with voice).

✅ Smart assistants that use vision & audio (e.g., Google Assistant).

✅ Healthcare AI that analyzes MRI scans + doctor’s notes.

✅ Autonomous vehicles using LIDAR, cameras, & sound sensors.

Tools for Processing & Preparing Multimodal Inputs

AI developers use specialized tools to handle multimodal data so as to process, align, and merge different formats.

1. Data Processing & Annotation Tools

🔹 Labelbox – AI-assisted data annotation for text, images, and video.

🔹 Amazon SageMaker Ground Truth – Automated multimodal data labeling.

🔹 SuperAnnotate – Image and video annotation with AI-driven workflows.

2. Preprocessing & Transformation Libraries

🔹 OpenCV – Image/video preprocessing for AI models.

🔹 Librosa – Audio signal processing for deep learning.

🔹 NLTK / SpaCy – Natural language processing (NLP) for text.

3. Multimodal Dataset Sources

🔹 COCO (Common Objects in Context) – Image + text caption dataset.

🔹 AudioSet (Google) – Large-scale dataset with audio event annotations.

🔹 HowTo100M – Video dataset with text descriptions for cross-modal learning.

Cross-Modal Reasoning & Understanding Technologies

Cross-modal reasoning enables AI models to connect concepts across different data types. This helps understand a spoken phrase and identify related images.

1. Transformer-Based Multimodal Models

🔹 CLIP (OpenAI) – Matches images with text descriptions.

🔹 DALL·E – Generates images from textual descriptions.

🔹 BLIP (Bootstrapped Language-Image Pretraining) – Joint vision-language modeling.

2. Speech & Audio Understanding

🔹 Whisper (OpenAI) – Automatic speech recognition (ASR).

🔹 Wav2Vec 2.0 (Facebook AI) – Self-supervised audio-text training.

3. Video-Language Processing

🔹 VideoBERT – Processes video + text for action recognition.

🔹 X-CLIP – Extends CLIP for video understanding.

🔹 Example: AI-powered video summarization, where a system transcribes speech, detects objects in frames, and extracts key events in a text format (like summary).

Multimodal Embedding Strategies & Techniques

Multimodal embeddings create a shared representation space for different data types (e.g., mapping text and images into the same vector space).

1. Early Fusion vs. Late Fusion Approaches

- Early Fusion – Combines raw inputs before feeding them into the model as in merging audio and video before analysis.

- Late Fusion – Processes each modality separately, then combines results. NLP and computer vision models run independently before merging outputs.

2. Common Embedding Techniques

🔹 Joint Embedding – Projects different modalities into the same latent space (e.g., CLIP’s shared vision-text embeddings).

🔹 Cross-Attention Mechanisms – Allows models to focus on important parts of different modalities (e.g., in vision-language tasks).

🔹 Graph Neural Networks (GNNs) – Uses graph structures to link multimodal information (e.g., connecting people in video clips with speech transcripts).

🔹 Example: AI providing captions for a video, where the model learns speech patterns and visual scenes to generate relevant captions.

Integration Challenges with Multimodal AI Services

Multimodal AI comes with challenges even though expectations run high, especially when combining with real-world applications:

1. Data Alignment Issues – Text, images, and audio need precise synchronization like matching spoken words to video frames.

2. Compute & Storage Demands – The GPU power to process large-scale multimodal models are high.

3. Model Complexity – Training multimodal AI models are more complex than a single-modality model.

4. Bias & Fairness – AI models can inherit biases from multimodal datasets like gender bias in image recognition.

5. Real-Time Processing – Handling live multimodal data like smart assistants processing voice and facial expressions demand low-latency optimizations.

🔹 Example: Using edge AI like TensorFlow Lite, ONNX for on-device processing instead of cloud-based multimodal inference.

Handling Multimodal Responses & Outputs

After processing multimodal data AI systems must generate responses to align across different modalities.

1. Output Representation Formats

✔ Text-based responses: Like chatbot responses.

✔ Audio feedback: Like virtual assistants reading messages aloud.

✔ Video synthesis: Like AI-generated animations based on script inputs.

2. AI Models for Multimodal Output Generation

🔹 DeepMind’s Flamingo – Generates descriptions of images and videos.

🔹 OpenAI’s GPT-4V – Can understand and respond to images & text inputs.

🔹 Synthesia – AI-driven video avatars that generate talking-head videos from text scripts.

🔹 Example: AI news summary system that generates text summaries, speech narration, and video highlights from a news event.

Guardrail Technologies

AI Safety and Control Frameworks

Guardrail technologies ensure AI models operate within ethical, legal, and contextual constraints. Some notable frameworks include:

- LangChain – A powerful framework for building applications with LLMs by managing prompts, memory, and chaining AI components. It helps enforce safety measures like access control and structured outputs.

- LlamaGuard – An open-source AI safety tool developed to enforce responsible AI usage. It ensures LLMs follow predefined safety policies.

- NeMo Guardrails – A conversational AI framework by NVIDIA that defines AI behavior rules, such as restricting toxic content, managing hallucinations, and enforcing compliance with enterprise guidelines.

Content Filtering and Moderation Systems

AI-driven content moderation systems filter harmful, offensive, or inappropriate content before it reaches users. Techniques include:

- Keyword Filtering – Identifying harmful words and phrases.

- Sentiment Analysis – Detecting aggressive, toxic, or harmful speech.

- Machine Learning-based Classification – Training models to distinguish between acceptable and inappropriate content (e.g., OpenAI’s Moderation API).

- Human-in-the-loop Moderation – AI-assisted content moderation with human oversight.

Input/Output Validation Mechanisms

To prevent unintended behavior, AI applications must validate both inputs and outputs. Common mechanisms include:

- Schema Validation – Ensuring inputs follow expected formats (e.g., JSON schema enforcement).

- Sanitization & Escaping – Removing harmful elements (e.g., stripping out harmful HTML, SQL injection protection).

- Context Awareness Checks – AI models validate responses based on defined rules to avoid hallucinations.

Prompt Injection Prevention Techniques

Prompt injection attacks manipulate AI-generated responses. Techniques to prevent them include:

- Strict Prompt Templates – Using well-defined, structured prompts to limit AI’s flexibility.

- Input Escaping & Parsing – Preventing malicious prompt overrides by sanitizing user inputs.

- AI Response Post-processing – Filtering and validating AI responses before delivering them to users.

- User Privilege Segmentation – Restricting AI behaviors based on user roles to prevent prompt exploitation.

Orchestration and Workflow Management

AI Service Orchestration Tools

AI service orchestration manages and connects multiple AI models or APIs efficiently. Some key tools include:

- LangChain – Manages multi-step AI workflows and integrates various LLMs, memory, and tools.

- LLamaIndex – Efficiently retrieves, indexes, and structures AI-powered information retrieval.

- NVIDIA NeMo – Used for multi-modal AI orchestration and large-scale conversational AI.

- Kubeflow – A cloud-native orchestration platform for ML workflows.

Workflow Management Systems

Workflow management ensures AI development services execute in a structured, efficient manner. Notable systems include:

- Apache Airflow – Open-source workflow automation tool that schedules AI tasks.

- Prefect – A Python-based workflow management system for data pipelines.

- Temporal.io – A scalable workflow orchestration engine for AI/ML applications.

Prompt Management and Optimization

Optimizing prompt design ensures efficient AI responses. Key techniques include:

- Dynamic Prompting – Adjusting prompts based on user interactions and context.

- Retrieval-Augmented Generation (RAG) – Fetching relevant data dynamically to improve AI responses.

- Fine-Tuning and Few-Shot Learning – Improving AI outputs with customized datasets and examples.

- Prompt Chaining – Breaking down complex requests into structured sub-prompts.

Chaining Multiple AI Services Together

For complex applications, chaining multiple AI models allows for better processing and response generation. Approaches include:

- Sequential Chaining – One AI model processes data and passes it to another.

- Parallel Processing – Multiple AI models analyse the same input simultaneously for enhanced accuracy.

- Decision Trees and Routing – AI dynamically selects the best model or service for a given task.

- API Orchestration – Combining LLMs, retrieval systems, and automation tools in a cohesive pipeline.

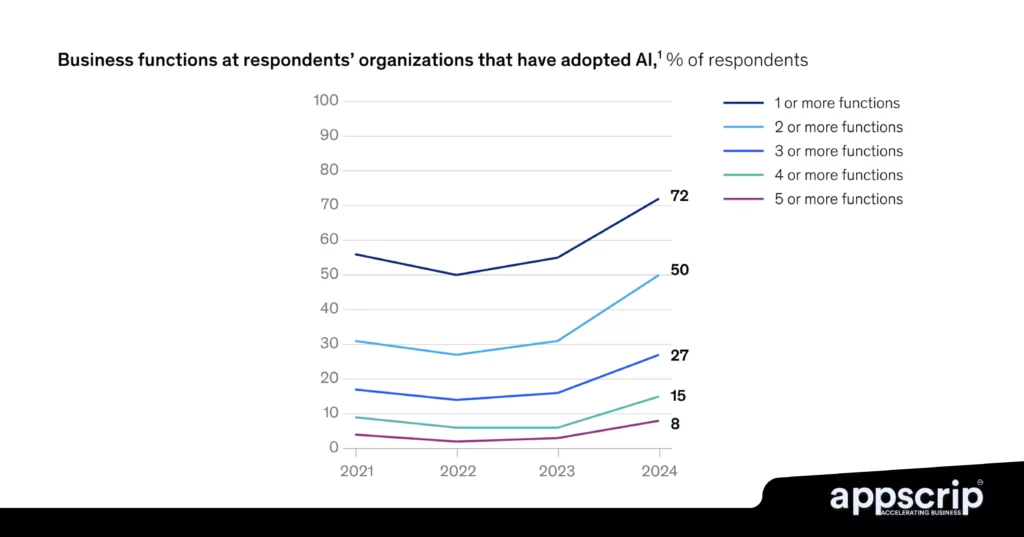

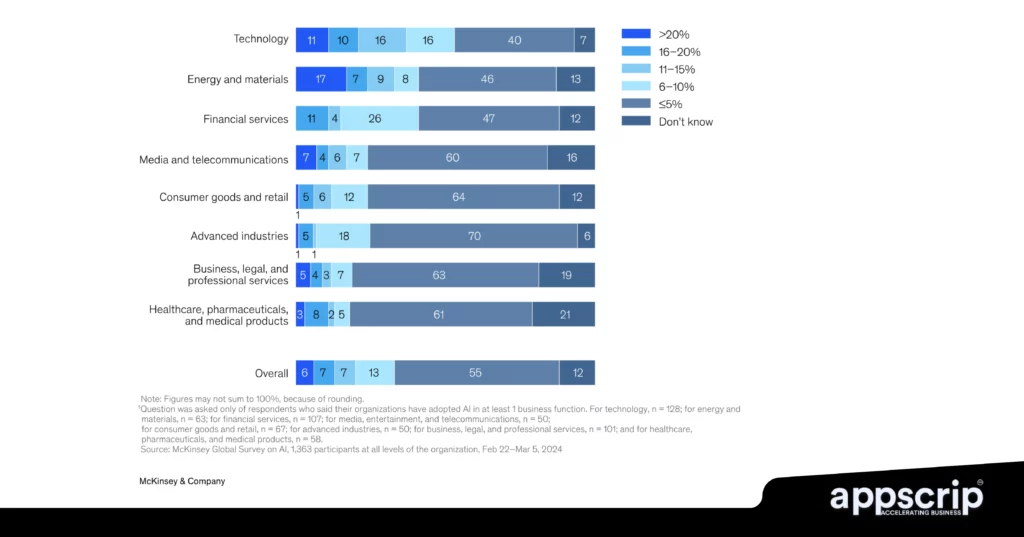

Organisations likely to invest more than 5% of the digital budgets on Gen AI and Analytical AI

Retrieval and Context Augmentation

Retrieval and context augmentation enhance AI models by providing relevant external knowledge, ensuring responses are accurate and context-aware.

Vector Database Solutions

Vector databases store and retrieve high-dimensional embeddings, enabling efficient similarity searches for information retrieval.

- Pinecone: A managed vector database optimized for AI applications, offering fast and scalable search with automatic indexing. Ideal for RAG (Retrieval-Augmented Generation) setups.

- Weaviate: Open-source and extensible, supporting hybrid search (vector + keyword) and semantic relationships. Features built-in classification and knowledge graph support.

- Chroma: A lightweight, developer-friendly vector database designed for embedding-based retrieval. Often used in AI chatbots and personalization systems.

Semantic Search Implementation

Semantic search goes beyond keyword matching, understanding intent and contextual meaning.

- Techniques: TF-IDF, BM25, Dense Retrieval (using transformers like BERT), and hybrid search (combining keyword + vector search).

- Applications: AI assistants, recommendation engines, and document search.

Embedding Models and Services

Embeddings convert text into numerical representations for similarity comparison.

- Models: OpenAI’s text-embedding-ada-002, Cohere’s embeddings, SBERT (Sentence-BERT), and Google’s Universal Sentence Encoder.

- Services: OpenAI Embeddings API, Hugging Face models, and self-hosted solutions with Faiss.

Knowledge Graph Integration

Knowledge graphs provide structured data relationships, improving reasoning and retrieval.

- Graph Databases: Neo4j, Amazon Neptune.

- Use Cases: AI assistants (e.g., combining retrieval + reasoning), fraud detection, enterprise search.

Implementation Patterns

Retrieval-Augmented Generation (RAG) Architectures

- Combines retrieval like vector search with generation such as GPT models.

- Helps LLMs ground responses in factual data, reducing illusions.

- Common pipeline:

- Convert user query to an embedding.

- Retrieve relevant documents from a vector database.

- Concatenate documents with the query.

- Pass to an LLM for response generation.

Hybrid Systems Combining Multiple AI Services

- Example: Using semantic search + knowledge graphs + LLMs for advanced QA systems.

- Multi-agent collaboration: Combining different AI agents for tasks (e.g., retrieval agent + reasoning agent).

- Cloud-based orchestration: Using services like LangChain or LlamaIndex to integrate AI components.

Human-in-the-Loop Implementations

- Enhances AI with human oversight for high-stakes applications for example in legal, healthcare, etc.

- Examples:

- AI suggests responses, humans validate.

- AI flags uncertain results for human review.

- Reinforcement learning from human feedback (RLHF).

Event-Driven AI Architectures

- AI systems react to real-time events instead of batch processing.

- Use Cases:

- Fraud detection: AI triggers an alert when suspicious transactions occur.

- Customer support automation: AI analyzes user sentiment and escalates cases.

- IoT + AI: AI models process sensor data for predictive maintenance.

- Implementation:

- Event-driven frameworks: Kafka, AWS Lambda.

- Combining AI with event streams for adaptive decision-making.

Conclusion: Seamless AI Integration

Building an effective enterprise AI stack isn’t about building from the scratch, but carefully selecting and integrating the right components as per needs. The makings of any successful AI implementation include robust API management, streamlined workflows, comprehensive guardrails, and effective multimodal processing capabilities.

The above elements work together to create a system that utilises powerful AI technologies while maintaining control, reliability, and security.